
ChatGPT has quickly become one of the world's fastest-growing chatbots and virtual assistants. The AI tool was released by OpenAI, a tech startup founded by Sam Altman, Greg Brockman, Elon Musk, and others.

OpenAI is often credited with starting the AI boom, especially in the field of generative AI. Its bots could reply in a human-like way, something that set them apart from other software available at the time.

Today, however, several versions of the chatbot run side by side, most notably 3.5, 4, and the newly launched 4o (Omni).

The question is, which one should you use? Which version gives the most consistent results without breaking the bank? Let’s find out in this article.

In June 2020, OpenAI drew wide attention with the launch of GPT-3 (referred to here as ChatGPT-3.0), its first commercially available large language model, offered to developers through an API. With 175 billion parameters at its backend, it was far larger than its predecessors.

In the last quarter of 2022, OpenAI released ChatGPT itself, built on an updated model known as GPT-3.5 (referred to here as ChatGPT 3.5). Surprisingly, it reportedly contained fewer parameters than the 3.0 version but performed better. OpenAI has not confirmed the exact figure.

This was possible due to Reinforcement Learning from Human Feedback (RLHF), a training technique in which the model is fine-tuned using human ratings of its responses. As a result, the 3.5 model narrowed down to answers more quickly and “hallucinated” less.

Thus, ChatGPT-3.5 was implemented in more Natural Language Processing (NLP) tasks than the previous models.

It was used as a development model for many online services like Hugging Face’s Python-based library to develop NLP-based models.

In March 2023, OpenAI announced a new version of the chatbot, ChatGPT 4.0. OpenAI has not disclosed its parameter count, and the widely circulated figure of 100 trillion parameters is an unconfirmed rumor. This version allowed multimodal input, which meant that it could now receive images as well as text in a prompt and respond to them in text.

ChatGPT-4 was trained on a much larger corpus, which gave it more knowledge to draw on for reasoning and problem-solving. This is why the new model handled complex scientific and mathematical problems that earlier versions struggled with.

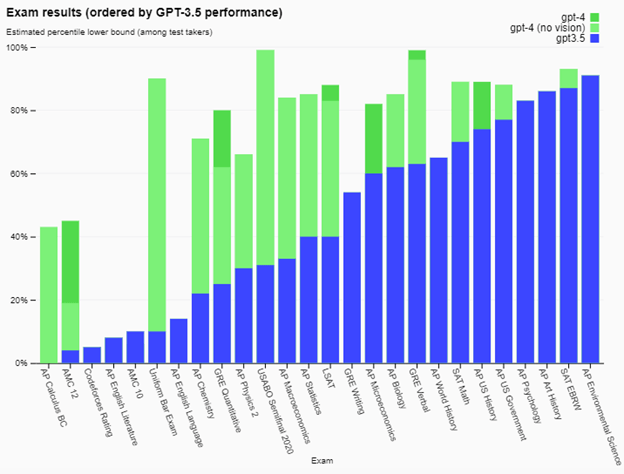

According to OpenAI, GPT-4 was 40% more likely to provide factual responses than ChatGPT-3.5. GPT-4 scored around the 90th percentile of test takers on the Uniform Bar Exam, whereas GPT-3.5 only managed a score around the 10th percentile.

Hence, the new ChatGPT 4 opened up many more options and opportunities, and the quality of content generation and optimization improved markedly.

ChatGPT-4o (the “o” stands for “Omni”) is the latest flagship of the GPT family. It is by far the most advanced chatbot and virtual assistant that the company has released.

Of the versions compared here, it hallucinates the least and responds the fastest while keeping its results accurate.

ChatGPT-4o supports TTS (text-to-speech) in over 50 languages, so you can converse with it in real time. On top of that, the new virtual assistant can understand video input and interpret what’s being asked of it.

The latest model responds to voice inputs in an average of 320 milliseconds, which is comparable to human response time in conversation. By comparison, ChatGPT-4 responds to voice queries with an average latency of 5.4 seconds, and ChatGPT-3.5 with an average of 2.8 seconds.

Such advances over its predecessors suggest that ChatGPT-4o has only begun to scratch the surface of its potential.

With all this said, let’s see how the ChatGPT models discussed so far stack up against each other in different tests.


So far, we have covered the key features of each model and the basic factors that distinguish them.

Now, it’s time to compare the three versions side by side on various types of tasks, as well as on pricing. This will help us determine which model is best and for what purpose each should be used.

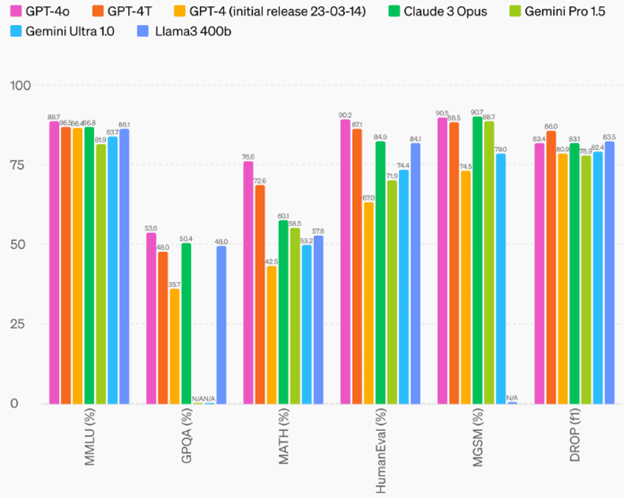

OpenAI evaluated ChatGPT-4 and ChatGPT-4o on many different datasets and included other LLMs in the results for reference. Here is a screenshot of their results:

Source: OpenAI-4o

We can see that GPT-4o has the highest accuracy across a wide range of benchmark tasks.

The results also include another ChatGPT model, called ChatGPT-4 Turbo (4T for short).

This version of ChatGPT was released months before GPT-4 Omni and was aimed specifically at developers. GPT-4T has a more recent training dataset than 4o but is more expensive and slower than the Omni model (more about this later).

Regardless, this test makes 4o the most suitable for a wide range of tasks including solving mathematical equations, reasoning, coding, classifying data, and much more.

It also puts 4o ahead of the others when working with multilingual audio and video data, and at high speed too.

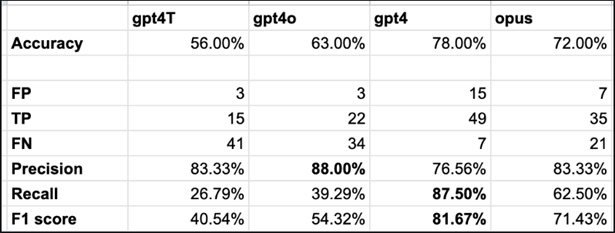

Next, we want to discuss a text classification test between ChatGPT-4, 4o, 4 Turbo, and Claude 3 Opus.

This test was conducted by Vellum.ai on a customer support ticket dataset. The data was a collection of spreadsheets with Boolean labels (ticket resolved: yes or no), and the test checked the ability of the models to accurately ‘recall’ information.

Source: Vellum.ai

Surprisingly, GPT-4o isn’t the clear winner here. The old GPT-4 came out as the winner in terms of the accuracy of the outputs.

However, we can see that GPT-4o got the highest precision. Precision is the share of a model's positive predictions that are actually correct, so 4o is a reliable choice for comparative analysis where false positives are costly, even though it can still miss some true cases.
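To see how one model can win on accuracy while another wins on precision, here is a minimal sketch of the standard metric definitions. The confusion-matrix counts are hypothetical and are not taken from the Vellum.ai test; the function name `classification_metrics` is also just an illustration.

```python
def classification_metrics(tp: int, fp: int, tn: int, fn: int) -> dict[str, float]:
    """Compute accuracy, precision, and recall from confusion-matrix counts."""
    total = tp + fp + tn + fn
    return {
        # Share of all predictions that were correct.
        "accuracy": (tp + tn) / total if total else 0.0,
        # Share of "yes" predictions that were really "yes".
        "precision": tp / (tp + fp) if (tp + fp) else 0.0,
        # Share of real "yes" cases that the model found.
        "recall": tp / (tp + fn) if (tp + fn) else 0.0,
    }


# Hypothetical counts for two imaginary models on 100 tickets each.
# "cautious" rarely says yes, so it makes few false positives.
cautious = classification_metrics(tp=30, fp=2, tn=48, fn=20)
# "balanced" says yes more often, so it finds more cases but errs more.
balanced = classification_metrics(tp=45, fp=10, tn=40, fn=5)

for name, metrics in (("cautious", cautious), ("balanced", balanced)):
    print(name, {k: round(v, 3) for k, v in metrics.items()})
```

In this made-up example the cautious model has the higher precision but the lower accuracy, which is the same kind of split described above.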

By now, you must’ve guessed that GPT-3.5 isn’t anywhere near the efficiency or power of GPT-4, 4o, or 4T. However, for the sake of completeness, we’ll include a direct comparison between 3.5 and the base version, GPT-4.0, to see their differences.

Source: OpenAI Research

These academic scoring results were very much predictable, as ChatGPT 3.5 is widely believed to have far fewer parameters than GPT-4 and GPT-4 with vision.

So, imagine how large the gap between GPT-4 Omni and GPT-3.5 must be.

By suitability, we mean which model you should use and when. We’ve already taken a deep look at each model’s performance and how they fare against each other in different tasks.

Now, to understand suitability, we must first understand the term token size. AI models break textual prompts into ‘tokens’, or small chunks of characters. As a rough estimate, one token equals about 4 characters or 0.75 words of English text.
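The rule of thumb above can be turned into a quick estimator. This is only a rough sketch based on the 4-characters and 0.75-words ratios; the function name `estimate_tokens` and the choice to take the larger of the two estimates are assumptions made for illustration. Exact counts require running the model's real tokenizer.

```python
import math


def estimate_tokens(text: str) -> int:
    """Roughly estimate the token count of English text.

    Uses two rules of thumb (1 token is about 4 characters, or about
    0.75 words) and returns the larger estimate to stay on the safe side.
    """
    by_chars = math.ceil(len(text) / 4)
    by_words = math.ceil(len(text.split()) / 0.75)
    return max(by_chars, by_words)


prompt = "Which version should you use?"
print(f"About {estimate_tokens(prompt)} tokens")
```

An estimate like this is good enough for budgeting, but it can be noticeably off for code, numbers, or non-English text.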

OpenAI states how much text each of its models can handle at once in tokens. The larger this limit, the longer the prompts can be while the model still takes every word into account. However, how much weight each word actually carries in the output is still a major talking point among researchers.

Anyhow, below are the token limits for ChatGPT 3.5, 4, 4o, and 4T, along with how recent their training data is.

| Model | Context Window | Training Data Cutoff |
| --- | --- | --- |
| GPT-4o | 128,000 tokens | Up to Oct 2023 |
| GPT-4-turbo | 128,000 tokens | Up to Dec 2023 |
| GPT-4-32k | 32,768 tokens | Up to Sep 2021 |
| GPT-3.5-turbo-0125 | 16,385 tokens | Up to Sep 2021 |

Source: OpenAI Models

Please note that there are multiple versions of GPT-4 and 3.5 available; we have only chosen the ones above for comparison. All of these models are paid and were available from the OpenAI platform at the time of writing.

However, the free version, ChatGPT 3.5 (basic), only provides a context window of 4,096 tokens. This is far less than what we see in the table above.

But, provided that day-to-day tasks don’t require extensive prompting, this should be enough for general users.

Yet, there’s no way app developers and enthusiasts are going to be satisfied with a token size of 4,096.

Thus, we recommend using the GPT-4o or GPT-4T model. The Omni model has a large capacity for taking in user input and can give precise answers.

This means that you could input a large batch of spreadsheets, Word files, code scripts, etc., and the 4o model could still take all of it into account when answering your question, as long as everything fits within its context window.
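As a rough illustration of how the token limits affect model choice, the sketch below checks which of the models from the comparison table could hold a prompt of a given size. The limits are copied from the table in this article; the function name `models_that_fit` and the 1,000 tokens reserved for the reply are assumptions, since the prompt and the response share the same window.

```python
# Token limits copied from the comparison table in this article.
CONTEXT_WINDOWS = {
    "gpt-4o": 128_000,
    "gpt-4-turbo": 128_000,
    "gpt-4-32k": 32_768,
    "gpt-3.5-turbo-0125": 16_385,
}


def models_that_fit(prompt_tokens: int, reserved_for_reply: int = 1_000) -> list[str]:
    """Return the models whose window can hold the prompt plus a reply.

    reserved_for_reply is an assumed budget for the model's answer.
    """
    needed = prompt_tokens + reserved_for_reply
    return [name for name, window in CONTEXT_WINDOWS.items() if needed <= window]


# A 20,000-token prompt is too large for the 3.5 model in the table.
print(models_that_fit(20_000))
```

A check like this is worth running before sending large documents, because a prompt that exceeds the limit has to be shortened or split first.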

Still, if your work requires responses on the latest discoveries and developments in the world, then ChatGPT-4 Turbo might be a better option for you, as it has more recent training data than the other models compared here.

Hence, it all comes down to your priorities and what you want to build on top of the famous chatbot.

Last but not least, we must consider the pricing of all the models we’ve discussed in this article. Money is a huge factor for many developers and should be considered by everyone before making a selection.

Below are the ‘token-wise’ prices from OpenAI, and how each model compares with one another.

| Model | Input | Output |
| --- | --- | --- |
| GPT-4o | $5.00 / 1M tokens | $15.00 / 1M tokens |
| GPT-4o-2024-05-13 | $5.00 / 1M tokens | $15.00 / 1M tokens |
| GPT-4-turbo | $10.00 / 1M tokens | $30.00 / 1M tokens |
| GPT-4-turbo-2024-04-09 | $10.00 / 1M tokens | $30.00 / 1M tokens |
| GPT-4 | $30.00 / 1M tokens | $60.00 / 1M tokens |
| GPT-4-32k | $60.00 / 1M tokens | $120.00 / 1M tokens |
| GPT-3.5-turbo-0125 | $0.50 / 1M tokens | $1.50 / 1M tokens |
| GPT-3.5-turbo-instruct | $1.50 / 1M tokens | $2.00 / 1M tokens |

Source: OpenAI Pricing

Outputs have different token-wise prices than inputs. A likely reason is that the model generates its reply one token at a time, which takes more computation per token than reading a prompt that is already written.

As is evident, the cheapest option of them all is ChatGPT 3.5-Turbo. It starts at $0.50 per 1 million input tokens and $1.50 per 1 million output tokens.
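To make the price gap concrete, here is a small sketch that estimates the bill for the same workload on several models. The prices are copied from the table in this article and may have changed since; the function name `request_cost` and the workload of 2 million input tokens and 500,000 output tokens are hypothetical.

```python
# (input price, output price) in USD per 1 million tokens,
# copied from the pricing table in this article.
PRICES_PER_1M = {
    "gpt-4o": (5.00, 15.00),
    "gpt-4-turbo": (10.00, 30.00),
    "gpt-4": (30.00, 60.00),
    "gpt-4-32k": (60.00, 120.00),
    "gpt-3.5-turbo-0125": (0.50, 1.50),
}


def request_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Return the estimated cost in USD for the given token counts."""
    input_price, output_price = PRICES_PER_1M[model]
    return (input_tokens * input_price + output_tokens * output_price) / 1_000_000


# Hypothetical monthly workload: 2M tokens sent, 500k tokens received.
for model in PRICES_PER_1M:
    cost = request_cost(model, input_tokens=2_000_000, output_tokens=500_000)
    print(f"{model}: ${cost:.2f}")
```

For this workload, the 3.5 Turbo model costs a tenth of what GPT-4o does, and GPT-4o costs half of what GPT-4 Turbo does, which follows directly from the per-token prices in the table.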

But don’t confuse this model with the freely available ChatGPT 3.5. The Turbo version is paid and is aimed at developers, who access it through the OpenAI platform.

Therefore, if you’re on a budget, pick the 3.5 Turbo option. It will provide you with a lot of decent answers at a very nominal price.

However, developing AI tools for scientific studies, academic research, etc., may require you to get the most advanced AI models with updated knowledge.

This leaves us with ChatGPT-4 Turbo as the viable option. Yet, GPT-4T is expensive. Not every firm/individual can afford such hefty costs of inputs and outputs.

So, our recommendation would be to pick the new GPT-4o model. It has a good balance of recent training data and reasonable pricing. It also performs a wide range of tasks with high precision and shows more promise of future improvements than the other models.

In any case, we can’t recommend using the base 4.0 version or the 4-32k version of ChatGPT. They are extremely costly and lower in accuracy and precision, and their training data isn’t up to date either.

Sure, you might be compromising on some of the high-end features that ChatGPT-4 possesses. But unless your work involves more than text, we still prefer ChatGPT-3.5 Turbo for tasks on a tight budget.

In this post, we explored the differences between the three most popular ChatGPT versions currently in the market. Those are ChatGPT-3.5, ChatGPT-4, and ChatGPT-4o (Omni).

First, we looked at each model in detail and tried to understand its stand-out features. Then, we did a detailed comparative analysis on three different criteria: performance, suitability, and price.

It was found that ChatGPT-4o performs the best out of all three. Another model, called ChatGPT-4 Turbo, came quite close to it in performance. However, it lost out because it is more expensive than the 4o version.

That’s it for the post! We hope you enjoyed reading our content!